How to train an MLP using the Pipeline

Import classes and define paths

[ ]:

from cetaceo.pipeline import Pipeline

from cetaceo.models import MLP

from cetaceo.data import HDF5Dataset

from cetaceo.evaluators import RegressionEvaluatorPlotter

from cetaceo.plotting import TrueVsPredPlotter

from cetaceo.utils import PathManager

from pathlib import Path

import torch

from sklearn.preprocessing import MinMaxScaler

import warnings

warnings.filterwarnings("ignore")

[2]:

DATA_DIR = Path.cwd().parent / "sample_data"

CASE_DIR = Path.cwd() / "results"

PathManager.create_directory(CASE_DIR / 'models')

PathManager.create_directory(CASE_DIR / 'hyperparameters')

PathManager.create_directory(CASE_DIR / 'plots')
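For readers without the helper at hand, the same directory layout can be sketched with the standard library alone. This assumes that `PathManager.create_directory` simply creates the directory when it is missing, which the source does not confirm. The temporary base directory is only there to keep the sketch self-contained.

```python
import tempfile
from pathlib import Path

# Stand-in for CASE_DIR; a temporary directory keeps the sketch free of side effects.
case_dir = Path(tempfile.mkdtemp()) / "results"

for name in ("models", "hyperparameters", "plots"):
    # parents=True also creates "results"; exist_ok=True makes reruns harmless.
    (case_dir / name).mkdir(parents=True, exist_ok=True)

created = sorted(p.name for p in case_dir.iterdir())
print(created)
```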

Define sklearn scalers if needed

Here we create two MinMaxScaler instances: one for scaling the inputs and one for the outputs.

[3]:

x_scaler = MinMaxScaler()

y_scaler = MinMaxScaler()
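As a reminder of what these scalers compute: with the default `feature_range` of (0, 1), `MinMaxScaler` maps each feature column to (x - min) / (max - min), using the minimum and maximum seen during `fit`, and `inverse_transform` undoes that mapping. The pure-Python sketch below reproduces the arithmetic on made-up numbers. The helper names are illustrative and belong to neither scikit-learn nor cetaceo, and fitting on the training split only is an assumption about how the scalers are used here.

```python
def fit_min_max(column):
    """Return the (min, max) pair a min-max scaler would learn from a column."""
    return min(column), max(column)

def scale(column, lo, hi):
    """Map values to the [0, 1] range defined by the fitted min and max."""
    return [(v - lo) / (hi - lo) for v in column]

def unscale(column, lo, hi):
    """Invert the scaling, recovering values in the original units."""
    return [v * (hi - lo) + lo for v in column]

# Made-up values for a single feature column.
train_col = [2.0, 4.0, 10.0]
test_col = [6.0, 12.0]

lo, hi = fit_min_max(train_col)          # fitted on the training column only
train_scaled = scale(train_col, lo, hi)
test_scaled = scale(test_col, lo, hi)    # values outside the fitted range exceed 1
restored = unscale(train_scaled, lo, hi)

print(train_scaled, test_scaled, restored)
```

Values that fall outside the fitted range are not clipped by default, so a scaled test value can be larger than 1 or smaller than 0.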

Create datasets

For this example, we use the airfoil data from the DLR paper. Because the files are stored in .h5 format, an HDF5Dataset is needed. We create one for each dataset split.

[4]:

train_dataset = HDF5Dataset(src_file=str(DATA_DIR / "train.h5"), x_scaler=x_scaler, y_scaler=y_scaler)

test_dataset = HDF5Dataset(src_file=str(DATA_DIR / "test.h5"), x_scaler=x_scaler, y_scaler=y_scaler)

valid_dataset = HDF5Dataset(src_file=str(DATA_DIR / "val.h5"), x_scaler=x_scaler, y_scaler=y_scaler)

Because we passed the scalers to the constructors, we can now scale the datasets.

[5]:

x, y = train_dataset[:]

train_dataset.scale_data()

valid_dataset.scale_data()

test_dataset.scale_data()

print("\tTrain dataset length: ", len(train_dataset))

print("\tTest dataset length: ", len(test_dataset))

print("\tValid dataset length: ", len(valid_dataset))

print("\tX, y train shapes:", x.shape, y.shape)

Train dataset length: 23283

Test dataset length: 23283

Valid dataset length: 11940

X, y train shapes: torch.Size([23283, 4]) torch.Size([23283, 1])

Evaluators and Plotters

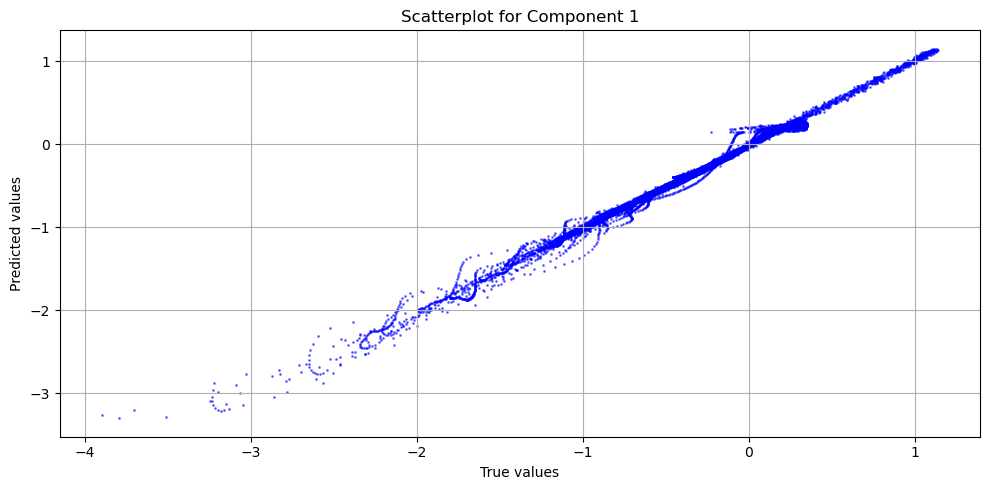

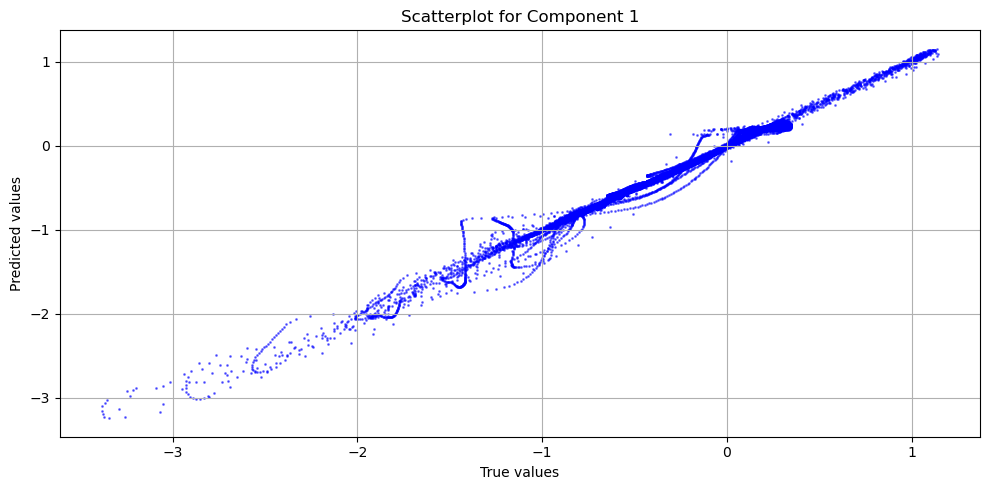

Here we define which evaluator to use. In this case we use a RegressionEvaluatorPlotter, which reports metrics for regression problems. This class also accepts a list of plotters, which create plots from the model's predictions.

[6]:

plotters = [TrueVsPredPlotter()]

evaluator = RegressionEvaluatorPlotter(plots_path=CASE_DIR / 'plots', plotters=plotters)

Model creation

All that remains is to define the training parameters and create the model. For this example we use an MLP.

[7]:

training_params = {
    "epochs": 100,
    "lr": 0.00126,
    "lr_gamma": 0.966,
    "lr_scheduler_step": 1,
    "batch_size": 512,
    "optimizer_class": torch.optim.Adam,
    "print_rate": 1,
}
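To get a feel for these values, the sketch below assumes that `lr_gamma` and `lr_scheduler_step` describe a step-wise exponential decay, in which the learning rate is multiplied by `lr_gamma` every `lr_scheduler_step` epochs (the behaviour of `torch.optim.lr_scheduler.StepLR`). Whether the Pipeline applies the schedule exactly this way is an assumption. The batch count uses the training set length printed earlier and assumes the last partial batch is kept.

```python
import math

lr0, gamma, step, epochs = 0.00126, 0.966, 1, 100

def lr_at(epoch):
    """Learning rate in effect during a 0-based epoch under step decay."""
    return lr0 * gamma ** (epoch // step)

for epoch in (0, 10, 50, epochs - 1):
    print(f"epoch {epoch + 1:3d}: lr = {lr_at(epoch):.3e}")

# Batches per epoch for 23283 samples and batch_size=512, keeping the remainder.
batches_per_epoch = math.ceil(23283 / 512)
print("batches per epoch:", batches_per_epoch)
```

Under these assumptions the learning rate falls by roughly a factor of 30 over the 100 epochs.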

[8]:

model = MLP(
    input_size=x.shape[1],
    output_size=y.shape[1],
    hidden_size=512,
    n_layers=3,
    p_dropouts=0.15,
)
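For orientation, a network with these settings might look like the plain-PyTorch sketch below. This is not cetaceo's implementation: it assumes that `n_layers` counts the hidden layers, that each hidden layer is followed by a ReLU and dropout, and that `p_dropouts` is a single probability shared by all layers. The class name `SketchMLP` is hypothetical; the input and output sizes come from the shapes printed earlier.

```python
import torch
from torch import nn

class SketchMLP(nn.Module):
    """Illustrative fully connected regressor; not the cetaceo MLP."""

    def __init__(self, input_size, output_size, hidden_size, n_layers, p_dropout):
        super().__init__()
        layers = []
        in_features = input_size
        for _ in range(n_layers):
            # Assumed block: linear layer, ReLU activation, dropout.
            layers += [nn.Linear(in_features, hidden_size), nn.ReLU(), nn.Dropout(p_dropout)]
            in_features = hidden_size
        layers.append(nn.Linear(in_features, output_size))
        self.net = nn.Sequential(*layers)

    def forward(self, x):
        return self.net(x)

sketch = SketchMLP(input_size=4, output_size=1, hidden_size=512, n_layers=3, p_dropout=0.15)
sketch.eval()  # switch dropout off for a deterministic forward pass
with torch.no_grad():
    out = sketch(torch.rand(8, 4))  # batch of 8 random inputs

n_params = sum(p.numel() for p in sketch.parameters())
print(tuple(out.shape), n_params)
```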

Run the pipeline

[9]:

pipeline = Pipeline(
    train_dataset=train_dataset,
    test_dataset=test_dataset,
    model=model,
    training_params=training_params,
    evaluators=[evaluator],
)

pipeline.run()

Epoch 1/100 | Train loss (x1e5) 3968.2092 | Test loss (x1e5) 640.3254

Epoch 2/100 | Train loss (x1e5) 531.2284 | Test loss (x1e5) 268.4059

Epoch 3/100 | Train loss (x1e5) 363.7133 | Test loss (x1e5) 193.8889

Epoch 4/100 | Train loss (x1e5) 281.3932 | Test loss (x1e5) 117.6769

Epoch 5/100 | Train loss (x1e5) 219.3242 | Test loss (x1e5) 94.8438

Epoch 6/100 | Train loss (x1e5) 188.3426 | Test loss (x1e5) 66.5134

Epoch 7/100 | Train loss (x1e5) 162.8639 | Test loss (x1e5) 52.9106

Epoch 8/100 | Train loss (x1e5) 147.2162 | Test loss (x1e5) 43.3563

Epoch 9/100 | Train loss (x1e5) 133.1248 | Test loss (x1e5) 69.3100

Epoch 10/100 | Train loss (x1e5) 129.9856 | Test loss (x1e5) 40.7987

Epoch 11/100 | Train loss (x1e5) 116.6901 | Test loss (x1e5) 34.9828

Epoch 12/100 | Train loss (x1e5) 111.6007 | Test loss (x1e5) 30.6759

Epoch 13/100 | Train loss (x1e5) 107.0436 | Test loss (x1e5) 37.7164

Epoch 14/100 | Train loss (x1e5) 100.2151 | Test loss (x1e5) 31.0014

Epoch 15/100 | Train loss (x1e5) 97.2787 | Test loss (x1e5) 31.0849

Epoch 16/100 | Train loss (x1e5) 94.6964 | Test loss (x1e5) 43.5958

Epoch 17/100 | Train loss (x1e5) 91.4782 | Test loss (x1e5) 29.6458

Epoch 18/100 | Train loss (x1e5) 90.4933 | Test loss (x1e5) 33.6109

Epoch 19/100 | Train loss (x1e5) 86.9575 | Test loss (x1e5) 30.7852

Epoch 20/100 | Train loss (x1e5) 88.1818 | Test loss (x1e5) 29.2189

Epoch 21/100 | Train loss (x1e5) 84.4978 | Test loss (x1e5) 22.2148

Epoch 22/100 | Train loss (x1e5) 84.4161 | Test loss (x1e5) 35.0264

Epoch 23/100 | Train loss (x1e5) 80.5170 | Test loss (x1e5) 22.3276

Epoch 24/100 | Train loss (x1e5) 80.5800 | Test loss (x1e5) 29.2161

Epoch 25/100 | Train loss (x1e5) 77.0368 | Test loss (x1e5) 22.5613

Epoch 26/100 | Train loss (x1e5) 77.6624 | Test loss (x1e5) 28.3152

Epoch 27/100 | Train loss (x1e5) 75.9246 | Test loss (x1e5) 27.3747

Epoch 28/100 | Train loss (x1e5) 75.1129 | Test loss (x1e5) 22.1203

Epoch 29/100 | Train loss (x1e5) 73.9176 | Test loss (x1e5) 21.3708

Epoch 30/100 | Train loss (x1e5) 73.8303 | Test loss (x1e5) 19.4375

Epoch 31/100 | Train loss (x1e5) 71.4301 | Test loss (x1e5) 17.4285

Epoch 32/100 | Train loss (x1e5) 71.6028 | Test loss (x1e5) 30.2202

Epoch 33/100 | Train loss (x1e5) 70.7754 | Test loss (x1e5) 27.7524

Epoch 34/100 | Train loss (x1e5) 70.7107 | Test loss (x1e5) 21.8441

Epoch 35/100 | Train loss (x1e5) 67.9373 | Test loss (x1e5) 18.7597

Epoch 36/100 | Train loss (x1e5) 66.5284 | Test loss (x1e5) 21.3425

Epoch 37/100 | Train loss (x1e5) 69.2680 | Test loss (x1e5) 18.1248

Epoch 38/100 | Train loss (x1e5) 66.0457 | Test loss (x1e5) 18.3276

Epoch 39/100 | Train loss (x1e5) 66.4341 | Test loss (x1e5) 18.3668

Epoch 40/100 | Train loss (x1e5) 64.8588 | Test loss (x1e5) 14.3345

Epoch 41/100 | Train loss (x1e5) 65.0324 | Test loss (x1e5) 22.2836

Epoch 42/100 | Train loss (x1e5) 64.2346 | Test loss (x1e5) 20.3639

Epoch 43/100 | Train loss (x1e5) 63.5873 | Test loss (x1e5) 23.6401

Epoch 44/100 | Train loss (x1e5) 63.5231 | Test loss (x1e5) 16.7890

Epoch 45/100 | Train loss (x1e5) 62.1668 | Test loss (x1e5) 14.9163

Epoch 46/100 | Train loss (x1e5) 60.7827 | Test loss (x1e5) 17.4896

Epoch 47/100 | Train loss (x1e5) 62.4567 | Test loss (x1e5) 13.8604

Epoch 48/100 | Train loss (x1e5) 61.4397 | Test loss (x1e5) 17.9461

Epoch 49/100 | Train loss (x1e5) 60.8647 | Test loss (x1e5) 19.6511

Epoch 50/100 | Train loss (x1e5) 60.5594 | Test loss (x1e5) 13.8324

Epoch 51/100 | Train loss (x1e5) 59.3509 | Test loss (x1e5) 12.3390

Epoch 52/100 | Train loss (x1e5) 60.1447 | Test loss (x1e5) 14.2438

Epoch 53/100 | Train loss (x1e5) 59.2692 | Test loss (x1e5) 19.0007

Epoch 54/100 | Train loss (x1e5) 59.7058 | Test loss (x1e5) 13.2120

Epoch 55/100 | Train loss (x1e5) 59.4506 | Test loss (x1e5) 21.6260

Epoch 56/100 | Train loss (x1e5) 59.7846 | Test loss (x1e5) 14.1505

Epoch 57/100 | Train loss (x1e5) 59.1430 | Test loss (x1e5) 11.9013

Epoch 58/100 | Train loss (x1e5) 57.7920 | Test loss (x1e5) 18.3841

Epoch 59/100 | Train loss (x1e5) 58.3397 | Test loss (x1e5) 12.8296

Epoch 60/100 | Train loss (x1e5) 56.5668 | Test loss (x1e5) 15.5571

Epoch 61/100 | Train loss (x1e5) 57.3597 | Test loss (x1e5) 18.4371

Epoch 62/100 | Train loss (x1e5) 58.0973 | Test loss (x1e5) 12.8372

Epoch 63/100 | Train loss (x1e5) 56.9284 | Test loss (x1e5) 12.7845

Epoch 64/100 | Train loss (x1e5) 55.6151 | Test loss (x1e5) 15.2220

Epoch 65/100 | Train loss (x1e5) 56.3089 | Test loss (x1e5) 12.8679

Epoch 66/100 | Train loss (x1e5) 57.0098 | Test loss (x1e5) 11.3940

Epoch 67/100 | Train loss (x1e5) 55.8330 | Test loss (x1e5) 16.7325

Epoch 68/100 | Train loss (x1e5) 55.6434 | Test loss (x1e5) 13.6628

Epoch 69/100 | Train loss (x1e5) 55.7484 | Test loss (x1e5) 13.5055

Epoch 70/100 | Train loss (x1e5) 54.4978 | Test loss (x1e5) 12.0881

Epoch 71/100 | Train loss (x1e5) 55.6273 | Test loss (x1e5) 15.7260

Epoch 72/100 | Train loss (x1e5) 55.3004 | Test loss (x1e5) 13.6676

Epoch 73/100 | Train loss (x1e5) 53.8224 | Test loss (x1e5) 11.3997

Epoch 74/100 | Train loss (x1e5) 54.8473 | Test loss (x1e5) 11.9121

Epoch 75/100 | Train loss (x1e5) 54.4477 | Test loss (x1e5) 13.0294

Epoch 76/100 | Train loss (x1e5) 54.2868 | Test loss (x1e5) 11.7455

Epoch 77/100 | Train loss (x1e5) 53.2922 | Test loss (x1e5) 11.6593

Epoch 78/100 | Train loss (x1e5) 52.6854 | Test loss (x1e5) 14.3897

Epoch 79/100 | Train loss (x1e5) 52.8497 | Test loss (x1e5) 13.3810

Epoch 80/100 | Train loss (x1e5) 53.2287 | Test loss (x1e5) 11.8646

Epoch 81/100 | Train loss (x1e5) 54.0476 | Test loss (x1e5) 14.3325

Epoch 82/100 | Train loss (x1e5) 53.6350 | Test loss (x1e5) 13.7774

Epoch 83/100 | Train loss (x1e5) 53.7555 | Test loss (x1e5) 11.0659

Epoch 84/100 | Train loss (x1e5) 53.1362 | Test loss (x1e5) 13.7008

Epoch 85/100 | Train loss (x1e5) 52.4187 | Test loss (x1e5) 16.8150

Epoch 86/100 | Train loss (x1e5) 52.6289 | Test loss (x1e5) 13.3272

Epoch 87/100 | Train loss (x1e5) 51.3318 | Test loss (x1e5) 13.8505

Epoch 88/100 | Train loss (x1e5) 53.0107 | Test loss (x1e5) 12.2213

Epoch 89/100 | Train loss (x1e5) 51.8942 | Test loss (x1e5) 13.0393

Epoch 90/100 | Train loss (x1e5) 51.7824 | Test loss (x1e5) 12.1874

Epoch 91/100 | Train loss (x1e5) 51.4040 | Test loss (x1e5) 12.8719

Epoch 92/100 | Train loss (x1e5) 50.7001 | Test loss (x1e5) 10.9069

Epoch 93/100 | Train loss (x1e5) 51.5473 | Test loss (x1e5) 12.0467

Epoch 94/100 | Train loss (x1e5) 50.8814 | Test loss (x1e5) 13.0899

Epoch 95/100 | Train loss (x1e5) 51.5298 | Test loss (x1e5) 13.6348

Epoch 96/100 | Train loss (x1e5) 51.8971 | Test loss (x1e5) 14.7821

Epoch 97/100 | Train loss (x1e5) 51.7207 | Test loss (x1e5) 11.0060

Epoch 98/100 | Train loss (x1e5) 51.4634 | Test loss (x1e5) 10.5973

Epoch 99/100 | Train loss (x1e5) 51.1898 | Test loss (x1e5) 11.7234

Epoch 100/100 | Train loss (x1e5) 50.5890 | Test loss (x1e5) 10.9737

--------------------------------------------------

Metrics on train data:

--------------------------------------------------

Rescale output: True

Regression evaluator metrics:

mse: 0.0015

mae: 0.0246

mre: 15.6336%

ae_95: 0.0813

ae_99: 0.1536

r2: 0.9936

l2_error: 0.0668

--------------------------------------------------

Metrics on test data:

--------------------------------------------------

Rescale output: True

Regression evaluator metrics:

mse: 0.0028

mae: 0.0286

mre: 17.6299%

ae_95: 0.0993

ae_99: 0.2377

r2: 0.9885

l2_error: 0.0895
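Several of the reported metrics have standard definitions, sketched below in pure Python on made-up numbers. The relative L2 error is included under the assumption that `l2_error` means ||y - y_pred|| / ||y||. The source does not define it, nor `mre`, `ae_95` or `ae_99`, so the latter three are left out.

```python
import math

def regression_metrics(y_true, y_pred):
    """Compute MSE, MAE, R^2 and an assumed relative L2 error for 1-D targets."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    return {
        "mse": ss_res / n,
        "mae": sum(abs(e) for e in errors) / n,
        "r2": 1.0 - ss_res / ss_tot,
        # Assumed definition: norm of the error relative to the norm of the target.
        "l2_error": math.sqrt(ss_res) / math.sqrt(sum(t * t for t in y_true)),
    }

# Made-up targets and predictions.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.5, 4.0]
metrics = regression_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```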

To save the model:

[10]:

model.save(path=str(CASE_DIR / "models"))